Computing and Informatics

Adoptability of Test Process Models: ISO/IEC 29119, TMMi and TPI from the small organization perspective

ReCIBE. Revista electrónica de Computación, Informática, Biomédica y Electrónica, vol. 7, no. 1, pp. 45-64, 2018

Universidad de Guadalajara

This work is licensed under Creative Commons Attribution-NonCommercial-ShareAlike 3.0 International.

Received: 08 February 2018

Accepted: 03 April 2018

Abstract: Testing is considered an important stage in the software development process, and there are different proposals for performing testing activities at the project level and at the organizational level. However, in the context of very small organizations, for which ad hoc proposals exist in software and systems engineering, it is not yet clear how easily influential models such as ISO/IEC 29119, TMMi and TPI can be adopted. The objective of this work is to compare these models against the resource and finance characteristics of small organizations established in ISO standardization guidelines. This study conducted a comparative analysis of test process models with respect to the characteristics of very small organizations, producing a comparative chart with the answers obtained from analyzing each model against the criteria considered. The analysis suggests that the assessed test process models are not easily adoptable by very small organizations.

Keywords: TMMi, TAMAR, ISO/IEC 29119-2, ISO/IEC 33063, TPI, MPS, software test process model, comparative analysis.

1. Introduction

Software testing is a stage of the development process whose application is complex across different projects (NIST, 2002). The concept of testing has evolved over time: it developed from a debugging-oriented activity, in which most organizations did not distinguish between testing and debugging, into one aimed at preventing defects (Gelperin & Hetzel, 1988), (Luo, 2001). According to (Meerts, 2016), Myers's first publications (Myers, 1979) set the stage for modern software testing practices. Trends in the software market affect the testing discipline by introducing new elements such as agile testing, mobile testing, crowdtesting, test factories, test automation and context-driven testing (Kulkarni, 2006), among others.

Software testing is also considered a key approach to risk mitigation in software development (Swinkels, 2000). There are different proposals, such as standards, process models, techniques and process descriptions from various industries, that describe how to perform testing at the project level and at the organizational level (García & Dávila, 2012). A systematic literature review of test process models (García, Dávila, & Pessoa, 2014) found 23 models and noted that the most reported and used ones are TMMi, proposed by the TMMi Foundation (TMMi Foundation, 2016), and TPI (Visser, et al., 2013), a proprietary process model of the company Sogeti. The recently published standard ISO/IEC 29119 (ISO/IEC, 2013) can also be included in this group.

On the other hand, there is no universally accepted definition of a small organization; therefore, various institutions around the world, governmental or not, have developed their own definitions based on criteria such as number of employees, sales volume and investment, among the most common (ECS, 2010). Some examples of this variety of definitions are: (i) the United States International Trade Commission, which refers to small and medium-sized enterprises (SMEs) as firms with fewer than 500 U.S.-based employees (USITC, 2010); and (ii) the Official Journal of the European Union (European Union, 2003), which sets a limit of 250 employees for the category of micro, small and medium-sized enterprises (SMEs).

In addition, as noted in (ISO/IEC, 2011), small and micro businesses far outnumber all other businesses. The software industry is no stranger to this reality, and many software companies are small (Cernant, Norman-Lopez, & Duch T-Figueras, 2014), (United Nations, 2012), (Laporte, Houde, & Marvin, 2014). In this context, the new family of standards ISO/IEC 29110, Systems and Software Life Cycle Profiles and Guidelines for Very Small Entities (VSEs), defines a very small entity as an enterprise, organization, department or project having up to 25 people (ISO/IEC, 2011).

VSEs have been recognized as an important factor in the economics of the software industry, producing either standalone software components or components integrated into larger systems (Laporte, Houde, & Marvin, 2014). However, VSE needs are often neglected in the development of the models and standards recognized in the market. For VSEs, this translates into, among other things, a lack of simplicity, low flexibility and difficulty of use (ISO/IEC, 2011). It also implies high costs for adopting the models (ISO/IEC, 2011), which could exclude VSEs from the market and distort fair competition (ECS, 2010), (Laporte, Houde, & Marvin, 2014).

In the context of international standardization, guides have been published for writing standards that take into account the needs of micro, small and medium-sized enterprises (ECS, 2010) (ISO/IEC, 2016). In the field of information technology (IT), several documents of the ISO/IEC 29110 series, representing life cycle process profiles for the systems and software domains tailored to VSEs, have also been published (ISO/IEC, 2011). In particular, ISO/IEC 29110-5-1-2 is a profile that presents two software development processes based on ISO/IEC 12207 but adapted to the reality of VSEs (ISO/IEC, 2011). Likewise, ISO/IEC 29110-5-6-2 is based on ISO/IEC 15288 for the systems domain (ISO/IEC, 2014). At a technical level, ISO/IEC 29110-5-1-2 covers a small set of ISO/IEC 12207 practices that can be considered "indispensable" to ensure the success of small projects. This new ISO effort opens the possibility of extending the analysis of the need for ad hoc models to other software development processes for which specialized models exist (Dávila, 2012). It also recognizes the VSEs' need to hold certifications of some kind (in particular, process certifications), which give them better opportunities to enter the market.

This work aims to perform a comparative assessment of the most representative test process models, TMMi, TPI and ISO/IEC 29119, using relevant assessment criteria from the perspective of VSEs. The article is organized as follows: Section 2 describes each of the models compared; Section 3 presents the criteria used; Section 4 presents the comparative analysis; and Section 5 presents a final discussion and future work.

2. Test Process Models

In the software industry, from the process perspective, there are two concepts to consider: (i) a (life cycle) process model is a framework of processes, activities and artifacts that facilitates communication and understanding of the different ways of organizing them (ISO/IEC, 2010); and (ii) organizational maturity is the degree to which the processes that contribute to the organization's current and future business goals have been implemented consistently across the organization (ISO/IEC, 2015). These concepts frame technological development from the process perspective and must also be considered in the software testing discipline. In a previous work (García, Dávila, & Pessoa, 2014), the authors identified various software testing process models, of which ISO/IEC 29119, TMMi and TPI are the most representative; they are described below.

2.1. ISO/IEC/IEEE 29119-2: Test Processes

The ISO/IEC/IEEE 29119 family of standards is developed by ISO/IEC in cooperation with IEEE (ISO/IEC, 2013). Four parts of the standard have been published to date: (i) concepts and definitions (ISO/IEC, 2013), (ii) test processes (ISO/IEC, 2013), (iii) test documentation (ISO/IEC, 2013), and (iv) test techniques (ISO/IEC, 2015). In particular, Part 2 defines a test process model that describes itself as generic: it can be used with any software life cycle model, applied to any type of testing, and used by organizations of any size (Reid, 2013). Organizations may not need to use all of these processes, so implementation usually involves selecting the set of processes best suited to the specific organization or project (ISO/IEC, 2013) (ISO/IEC, 2008), i.e., a tailoring procedure. However, commercial pressure to obtain certifications means having to meet certain requirements. An organization can comply with Part 2 of the standard in two ways: full compliance (demonstrating that all the mandatory requirements of all processes are met) or tailored compliance (justifying the selection and use of certain processes and demonstrating that all the mandatory requirements of the selected processes are met) (ISO/IEC, 2013); in practice, however, the form of compliance is usually determined by the certifying body.
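The two forms of compliance can be expressed as a simple check. The sketch below assumes a hypothetical record per process holding how many of its mandatory requirements are met; the class, field names and requirement counts are illustrative and not taken from the standard, and an actual compliance claim is judged by an assessor or certifying body rather than computed.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class ProcessStatus:
    """Hypothetical record of one 29119-2 process as implemented by an organization."""
    name: str
    shall_met: int            # mandatory requirements satisfied
    shall_total: int          # mandatory requirements in the process
    selected: bool = True     # False if the organization tailored the process out
    justification: str = ""   # reason recorded for leaving the process out

    def fully_met(self) -> bool:
        return self.shall_met == self.shall_total


def compliance_type(processes: list[ProcessStatus]) -> str:
    """Return 'full', 'tailored' or 'none' (illustrative rule, not normative)."""
    # Full: every process is implemented and all of its requirements are met.
    if all(p.selected and p.fully_met() for p in processes):
        return "full"
    selected = [p for p in processes if p.selected]
    omitted = [p for p in processes if not p.selected]
    # Tailored: each omitted process is justified and each selected one is fully met.
    if selected and all(p.justification for p in omitted) and all(p.fully_met() for p in selected):
        return "tailored"
    return "none"


# Hypothetical VSE with made-up requirement counts.
procs = [
    ProcessStatus("Test Planning", 10, 10),
    ProcessStatus("Test Execution", 5, 5),
    ProcessStatus("Organizational Test Process", 0, 6, selected=False,
                  justification="single-project VSE"),
]
print(compliance_type(procs))  # tailored
```

Leaving out a process without recording a justification makes the same organization fall back to "none", which mirrors the requirement that tailoring be justified.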

ISO/IEC 29119 includes eight processes, which, according to ISO/IEC 33063 (ISO/IEC, 2015), involve 89 base practices and 40 artifacts. Its processes can be rated on five process capability levels: Performed, Managed, Established, Predictable and Innovating. ISO/IEC 29119 can be viewed as either a staged or a continuous model. Its architecture and assessment are presented below.

2.1.1. Architecture of ISO/IEC 29119-2

ISO/IEC 29119-2 classifies the processes into the organizational test process group, the test management process group and the dynamic test process group (ISO/IEC, 2013). Each process is described in terms of its purpose, outcomes, activities, tasks and information items, following the guidelines for process description in ISO/IEC TR 24774 (ISO/IEC, 2013).
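The grouping above can be captured as a small data structure, for example as a starting template for documenting processes. The sketch assumes the process names as we understand them from ISO/IEC/IEEE 29119-2 (one organizational process, three test management processes and four dynamic test processes, which add up to the eight processes mentioned earlier); the class and function names are hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass, field

# Process groups of ISO/IEC/IEEE 29119-2 and their processes (names as we understand them).
PROCESS_GROUPS: dict[str, list[str]] = {
    "Organizational test process": ["Organizational Test Process"],
    "Test management processes": [
        "Test Planning", "Test Monitoring and Control", "Test Completion",
    ],
    "Dynamic test processes": [
        "Test Design and Implementation",
        "Test Environment Set-up and Maintenance",
        "Test Execution",
        "Test Incident Reporting",
    ],
}


@dataclass
class ProcessDescription:
    """Description elements of one process; the contents are left as placeholders."""
    name: str
    group: str
    purpose: str = ""
    outcomes: list[str] = field(default_factory=list)
    activities: dict[str, list[str]] = field(default_factory=dict)  # activity -> tasks
    information_items: list[str] = field(default_factory=list)


def skeleton() -> list[ProcessDescription]:
    """Build one empty description per process, e.g. as a template for a VSE."""
    return [ProcessDescription(name=p, group=g)
            for g, procs in PROCESS_GROUPS.items() for p in procs]


descriptions = skeleton()
print(len(descriptions))  # 8
```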

2.1.2. Assessment of ISO/IEC 29119-2

According to ISO/IEC 15504-2 (ISO/IEC, 2004) and ISO/IEC 33001 (ISO/IEC, 2015), a process reference model (PRM), such as the one described in ISO/IEC/IEEE 29119-2, requires a process assessment model (PAM). A PAM (ISO/IEC, 2004) allows a reliable and consistent assessment of process capability. In addition, a PAM extends a PRM by incorporating indicators to be considered when interpreting the intent of the PRM. ISO/IEC 33063 (ISO/IEC, 2015) provides an example of a PAM based on the PRM of ISO/IEC/IEEE 29119-2, for use in performing a conformant assessment in accordance with the requirements of ISO/IEC 33002 (ISO/IEC, 2015). ISO/IEC 33063 determines the degree of process capability from a set of capability indicators applicable to the PRM, through a formal and rigorous process (ISO/IEC, 2015).
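To illustrate what determining capability from ratings looks like, the sketch below applies the rating rule of the ISO/IEC 33020 measurement framework as we understand it: each process attribute is rated N, P, L or F (not, partially, largely or fully achieved), and a capability level is reached when its attributes are at least largely achieved and all attributes of lower levels are fully achieved. The attribute identifiers follow that framework; the function is hypothetical and skips the step of turning indicator evidence into ratings, which is the assessor's job.

```python
from __future__ import annotations

# Process attributes per capability level (assumed from the ISO/IEC 33020 framework).
ATTRIBUTES = {
    1: ["PA1.1"],
    2: ["PA2.1", "PA2.2"],
    3: ["PA3.1", "PA3.2"],
    4: ["PA4.1", "PA4.2"],
    5: ["PA5.1", "PA5.2"],
}


def capability_level(ratings: dict[str, str]) -> int:
    """Return the capability level (0-5) of one process.

    ratings maps attribute ids to "N", "P", "L" or "F"; unrated attributes count as "N".
    """
    level = 0
    for n in range(1, 6):
        current_ok = all(ratings.get(a, "N") in ("L", "F") for a in ATTRIBUTES[n])
        lower_ok = all(ratings.get(a, "N") == "F"
                       for m in range(1, n) for a in ATTRIBUTES[m])
        if current_ok and lower_ok:
            level = n
        else:
            break  # levels are cumulative, so stop at the first one not reached
    return level


print(capability_level({"PA1.1": "F", "PA2.1": "L", "PA2.2": "F"}))  # 2
```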

2.2. Test Process Improvement TPI®

The TPI® process improvement methodology was developed in 1998 by Koomen and Pol (Tim & Martin, 1999), based on practical knowledge and experience of the Sogeti test process (Sogeti Web Site, 2015). In 2009, Sogeti published TPI Next® (Visser, et al., 2013), an improved version of the previous model. The model offers a view of the maturity of the test processes within an organization; based on this understanding, it helps to define gradual and controllable test process improvement steps (Visser, et al., 2013). The architecture of the model and how it is assessed are described below.

2.2.1. Architecture of TPI

The TPI model defines 16 key areas, which are not processes in the strict sense but together cover all aspects of the test process (Visser, et al., 2013). The key areas are classified into three groups: Stakeholder Relations, Test Management and Test Profession (Visser, et al., 2013). Each key area can be classified into one of four maturity levels: Initial, Controlled, Efficient and Optimized. To verify the classification into levels, one or more checkpoints are assigned to each level; if a test process passes all the checkpoints of a given level, it is classified at that level. TPI has 157 checkpoints and 13 predefined improvement steps, also called base clusters, each containing between 10 and 14 checkpoints.
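The classification rule described above can be sketched as a function over checkpoint results. The sketch assumes that Initial is the starting classification (so checkpoints are listed only for the levels above it) and that a level counts only if the level below it was also reached; the function name and the checkpoint identifiers are hypothetical, not TPI Next's.

```python
from __future__ import annotations

LEVELS = ["Initial", "Controlled", "Efficient", "Optimized"]


def key_area_level(checkpoints: dict[str, list[str]], passed: set[str]) -> str:
    """Classify one key area from its checkpoint results.

    checkpoints maps each level above Initial to its checkpoint ids. A level is
    reached only if all of its checkpoints pass and the level below was reached.
    """
    reached = LEVELS[0]
    for level in LEVELS[1:]:
        if all(cp in passed for cp in checkpoints.get(level, [])):
            reached = level
        else:
            break  # a higher level cannot be reached while this one is open
    return reached


# Hypothetical checkpoint ids for a single key area.
test_strategy = {
    "Controlled": ["TS.C1", "TS.C2"],
    "Efficient": ["TS.E1"],
    "Optimized": ["TS.O1"],
}
print(key_area_level(test_strategy, {"TS.C1", "TS.C2", "TS.O1"}))  # Controlled
print(key_area_level(test_strategy, set()))                        # Initial
```

Passing an Optimized checkpoint does not help while an Efficient checkpoint is still open, which is why the first example stays at Controlled.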

For each key area, the model presents (Visser, et al., 2013): i) an overview of each maturity level of the key area; ii) checkpoints by level; iii) enablers by level; and iv) improvement suggestions by maturity level. TPI is a staged model: its architecture lays out the improvement steps (base clusters) needed to increase process maturity. This type of representation assumes that all key areas are equally relevant. To use the model in a continuous way, an organization must first prioritize the key areas based on its business goals. The checkpoints of the base clusters are then rearranged into new clusters, verifying that no checkpoint dependencies are violated; if any are, the checkpoints must be rearranged again. Finally, the new clusters are balanced so that each ends up with a similar number of checkpoints.
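One way to carry out the rearrangement described above is a topological sort of the checkpoints that, whenever several checkpoints are available, picks the one from the highest-priority key area, followed by cutting the resulting sequence into clusters of near-equal size. This is a sketch under our own assumptions (Kahn's algorithm with a priority queue, integer priority ranks, a hypothetical `recluster` helper and hypothetical checkpoint ids), not a procedure prescribed by TPI Next.

```python
from __future__ import annotations
import heapq


def recluster(checkpoints: dict[str, str],
              depends_on: dict[str, list[str]],
              priority: dict[str, int],
              n_clusters: int) -> list[list[str]]:
    """Rearrange checkpoints into n_clusters balanced, dependency-safe clusters.

    checkpoints: checkpoint id -> key area it belongs to
    depends_on:  checkpoint id -> ids that must be met before it
    priority:    key area -> rank (1 = contributes most to business goals)
    """
    indegree = {cp: 0 for cp in checkpoints}
    dependents: dict[str, list[str]] = {cp: [] for cp in checkpoints}
    for cp, prerequisites in depends_on.items():
        for pre in prerequisites:
            indegree[cp] += 1
            dependents[pre].append(cp)

    # Kahn's algorithm; the heap yields the available checkpoint whose key area
    # has the best (lowest) rank, breaking ties by checkpoint id.
    ready = [(priority[checkpoints[cp]], cp) for cp, deg in indegree.items() if deg == 0]
    heapq.heapify(ready)
    order: list[str] = []
    while ready:
        _, cp = heapq.heappop(ready)
        order.append(cp)
        for nxt in dependents[cp]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                heapq.heappush(ready, (priority[checkpoints[nxt]], nxt))
    if len(order) != len(checkpoints):
        raise ValueError("checkpoint dependencies contain a cycle")

    # Cut the ordered list into contiguous clusters whose sizes differ by at most one.
    size, extra = divmod(len(order), n_clusters)
    clusters: list[list[str]] = []
    start = 0
    for i in range(n_clusters):
        end = start + size + (1 if i < extra else 0)
        clusters.append(order[start:end])
        start = end
    return clusters


cps = {"A1": "Test strategy", "A2": "Test strategy",
       "B1": "Defect management", "B2": "Defect management"}
print(recluster(cps, {"B2": ["A1"]}, {"Defect management": 1, "Test strategy": 2}, 2))
# [['B1', 'A1'], ['B2', 'A2']]
```

Because each cluster is a contiguous slice of a dependency-respecting order, no checkpoint lands in an earlier cluster than a checkpoint it depends on, so this variant never needs a second rearrangement.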

2.2.2. Assessment of TPI

The organization's test process is assessed using the same elements presented in the previous section. TPI proposes a four-level maturity model (Visser, et al., 2013): Initial, Controlled, Efficient and Optimized. These maturity levels are achieved in stages: the organization reaches a level when all 16 key areas are at that maturity level (Visser, et al., 2013), and a level can be reached only if the previous level has been reached (Visser, et al., 2013). To achieve each level, TPI defines clusters (13 in total) that establish small improvement steps toward the desired maturity level; each cluster consists of a fixed number of checkpoints from several key areas (Visser, et al., 2013).
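The staged rules above can be sketched as two small helpers: the organization's level is the lowest level reached by any key area, and the next improvement step is the first cluster that still has open checkpoints. Both functions and the example data are hypothetical illustrations of the description above, not part of the TPI Next toolkit.

```python
from __future__ import annotations

LEVELS = ["Initial", "Controlled", "Efficient", "Optimized"]


def organization_level(key_area_levels: dict[str, str]) -> str:
    """Staged view: the organization sits at the lowest level reached by any key area."""
    return min(key_area_levels.values(), key=LEVELS.index)


def next_improvement_step(clusters: list[list[str]],
                          passed: set[str]) -> tuple[int, list[str]] | None:
    """Return (cluster index, open checkpoints) for the first unfinished cluster,
    or None when every cluster has been completed. Clusters are taken in order."""
    for i, cluster in enumerate(clusters):
        open_cps = [cp for cp in cluster if cp not in passed]
        if open_cps:
            return i, open_cps
    return None


print(organization_level({"Test strategy": "Efficient", "Defect management": "Controlled"}))
# Controlled
print(next_improvement_step([["A1", "B1"], ["A2", "B2"]], {"A1", "B1", "A2"}))  # (1, ['B2'])
```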

The model structure allows an organization to implement TPI in a staged or continuous way; the continuous way assumes that certain key areas contribute more to the business goals than others (Alone & Glocksien, n.d.). This requires a preliminary prioritization analysis, so that the improvement steps are not the model's defaults but are set according to the needs of each organization.

2.3. TMMi: Test Maturity Model Integration

Test Maturity Model integration (TMMi) is a maturity model developed by the TMMi Foundation (TMMi Foundation, 2016). According to (TMMi Foundation, 2012), its main differences from other models are its independence, its compliance with international standards and its complementary relationship with CMMI, a software process maturity model that is well positioned in the IT industry (Rasking, 2011).

The terminology used in TMMi is based on the glossary of terms established by the ISTQB (International Software Testing Qualifications Board) (ISTQB, 2016), so in this model testing is considered both a dynamic and a static activity; unlike ISO/IEC 29119-2, TMMi includes static verification techniques such as reviews. TMMi is a process model with a staged representation: 16 process areas, 173 specific practices and 12 generic practices organized into five maturity levels: Initial, Managed, Defined, Measured and Optimization. TMMi has not yet published a continuous representation of the model. The architecture of TMMi and how it is assessed are described below.

2.3.1. Architecture of TMMi

The TMMi model consists of several components, which are categorized (TMMi Foundation, 2012) as: i) required: components necessary to achieve a given maturity level; ii) expected: components usually implemented by organizations to satisfy a specific process area, although the assessor can also recognize an acceptable alternative; and iii) informative: sub-practices, example work products, notes, references, etc., which give the organization ideas on how to address the required components.

TMMi defines a maturity level as a degree of quality of the organization's test process and as an improvement step that the organization must follow in stages (TMMi Foundation, 2012). To reach a level, an organization must meet all the goals (generic and specific) of that level and of all previous levels (TMMi Foundation, 2012). The model has five maturity levels; levels 2 to 5 each contain a fixed set of process areas. All organizations start at maturity level 1, since this level defines no goals to be met (TMMi Foundation, 2012).
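The staged rule above can be sketched as follows. The process-area names per level are given as we recall them from the TMMi reference model (their count, 16, matches the figure given earlier) and should be checked against the current release; the sketch also simplifies by treating "all specific and generic goals satisfied" as a single boolean per process area, and the function name is hypothetical.

```python
from __future__ import annotations

# TMMi process areas by maturity level, as we recall them (level 1 has none).
PROCESS_AREAS = {
    2: ["Test Policy and Strategy", "Test Planning", "Test Monitoring and Control",
        "Test Design and Execution", "Test Environment"],
    3: ["Test Organization", "Test Training Program", "Test Lifecycle and Integration",
        "Non-functional Testing", "Peer Reviews"],
    4: ["Test Measurement", "Product Quality Evaluation", "Advanced Reviews"],
    5: ["Defect Prevention", "Quality Control", "Test Process Optimization"],
}


def tmmi_maturity(goals_met: dict[str, bool]) -> int:
    """Return the maturity level (1-5).

    goals_met maps a process area to True when all of its specific and generic
    goals are satisfied. Level n requires every process area of levels 2..n.
    """
    level = 1  # every organization starts here; level 1 defines no goals
    for n in range(2, 6):
        if all(goals_met.get(pa, False) for pa in PROCESS_AREAS[n]):
            level = n
        else:
            break
    return level


level2 = {pa: True for pa in PROCESS_AREAS[2]}
print(tmmi_maturity(level2))                            # 2
print(tmmi_maturity({**level2, "Peer Reviews": True}))  # 2 (rest of level 3 not met)
```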

2.3.2. Assessment of TMMi

In 2014, the TMMi Foundation published TAMAR (TMMi Assessment Method Application Requirements) (TMMi Foundation, 2009), a set of requirements for implementing an assessment method based on the TMMi process model. TAMAR is an adaptation of the process assessment standard ISO/IEC 15504-2 (ISO/IEC, 2004).

Assessment methods that comply with TAMAR (TMMi Foundation, 2009) can be accredited by the TMMi Foundation. Such methods can be used to identify the strengths and weaknesses of the current test process and to determine ratings against the TMMi maturity model.

3. Criteria for the Comparative Analysis

In the context of international standards, both ISO (ISO/IEC, 2016) and the European Committee for Standardization (ECS, 2010) have published guides with advice and recommendations for writing standards that consider the needs of small businesses; both documents can be downloaded free of charge. According to ISO/IEC 29110 (ISO/IEC, 2011), a Very Small Entity (VSE) is an enterprise, organization, department or project of up to 25 people. In addition, section 6.3 of ISO/IEC 29110-4 (ISO/IEC, 2011) contains a set of characteristics, needs and suggested competencies for the basic profile of a small organization that develops software. This characterization is used to develop new documents in the ISO/IEC 29110 series (ISO/IEC, 2011).

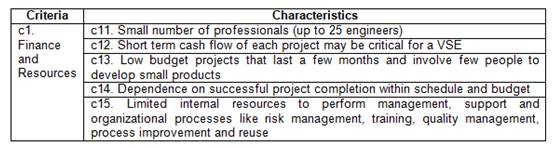

The characterization of VSEs presented in ISO/IEC 29110-4 (ISO/IEC, 2011) is classified into four categories: (i) Finance and Resources, (ii) Customer Interface, (iii) Internal Business Processes, and (iv) Learning and Growth. This article reports progress on the analysis conducted for the Finance and Resources category; information related to the other categories has therefore been intentionally omitted. Table 1 shows the criteria and characteristics considered as the basis for this analysis. The codes that identify each characteristic in the table are used throughout this work to refer to them in the analysis.

4. Comparative Analysis

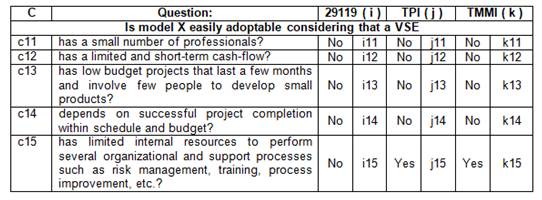

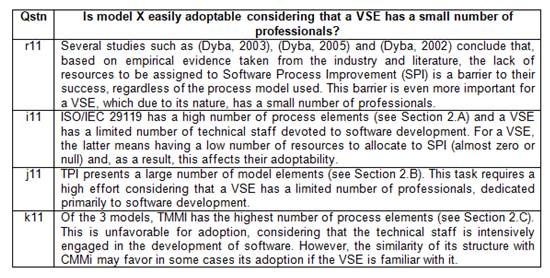

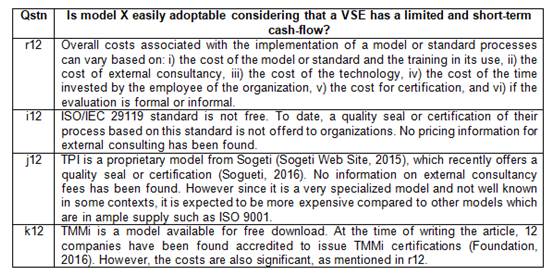

Comparative analysis is a technique introduced in Psychology by Ragin that can be used in Software Engineering to synthesize information (Genero Bocco, Cruz-Lemus, & Piattini Velthuis, 2014). In our case, the analysis poses a question about the degree of adoption (adoptability) of each model in the context of a VSE and answers it from the model's features. The answers were obtained through the expert judgment of software process improvement professionals. A “Yes” or “No” value means that the standard or model does or does not meet the characteristic; an “NA” value means that there was not enough evidence to make a decision. The analysis is carried out for the criteria and characteristics listed in Section 3. A summary table is presented first, followed by justification tables for each characteristic.
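Once the expert judgments are recorded, the three-valued answers can be tabulated mechanically. The sketch below is a hypothetical helper for counting Yes/No/NA answers per model and listing unmet characteristics; the model names and answers in the example are invented solely to exercise the code and are not the results of this study, which are reported in Tables 2 to 7.

```python
from __future__ import annotations
from collections import Counter
from enum import Enum


class Answer(Enum):
    YES = "Yes"  # the model meets the characteristic
    NO = "No"    # the model does not meet it
    NA = "NA"    # not enough evidence to decide


def summarize(answers: dict[str, dict[str, Answer]]) -> dict[str, Counter]:
    """Count Yes/No/NA answers per model; answers maps model -> {code -> Answer}."""
    return {model: Counter(a.value for a in by_code.values())
            for model, by_code in answers.items()}


def unmet(answers: dict[str, dict[str, Answer]], model: str) -> list[str]:
    """Characteristic codes that the given model does not meet."""
    return sorted(code for code, a in answers[model].items() if a is Answer.NO)


# Invented answers, used only to exercise the helpers.
example = {
    "model_x": {"c11": Answer.NO, "c12": Answer.NA, "c13": Answer.YES},
    "model_y": {"c11": Answer.NO, "c12": Answer.NO, "c13": Answer.NO},
}
summary = summarize(example)
print(summary["model_x"]["No"], summary["model_y"]["No"])  # 1 3
print(unmet(example, "model_y"))  # ['c11', 'c12', 'c13']
```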

ISO/IEC 29110 (ISO/IEC, 2011)

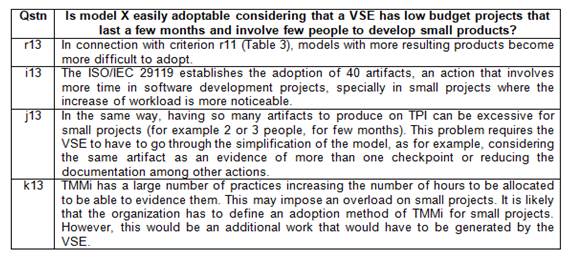

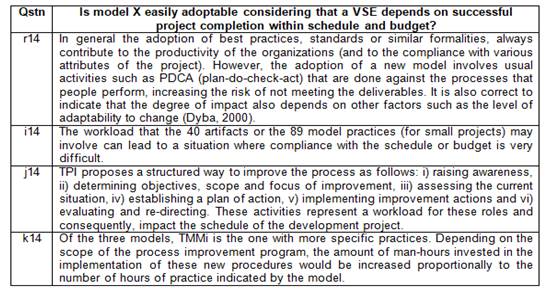

Table 2 provides an overview of the results of analyzing each model against the adoptability question for each criterion. The first column of this table (labeled “C”) refers to the characteristics, the second column contains the analysis question, and the remaining columns, grouped in pairs, show the Yes/No answers for each model. Tables 3, 4, 5, 6 and 7 contain the justifications for characteristics c11, c12, c13, c14 and c15, respectively. Each justification table has a first row containing the question, followed by a summary of the arguments that apply to that characteristic.

5. Discussion of Results and Future Work

This comparative analysis shows directly (and qualitatively) that the test process models considered are not aligned with the underlying needs of very small entities with respect to the characteristics of the Finance and Resources category. The main reason is essentially the size of very small entities (up to 25 people), as defined by ISO/IEC 29110-1.

Based on the above analysis, it can be established that the compared models have a high level of abstraction. That is, they are generic models that attempt to cover all the needs of the test process for different types of tests, different software life cycles and different domains, and therefore they do not provide a high level of specificity. Although this level of abstraction enables adaptation to different contexts, in practice adapting them turns out to be more complicated for VSEs because of the constraints they face, as reflected in the analysis. As in other contexts, the needs of VSEs have not been considered explicitly.

It is worth highlighting that all three models describe themselves as risk-based testing models: the concept of risk is used to define and limit the test process in terms of scope, cost and schedule. VSEs could benefit from this aspect, but it is not easy to perceive, since the models are extensive in terms of the number of documents and practices to be covered.
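To make the idea of using risk to limit scope concrete, the sketch below ranks hypothetical test items by risk exposure (likelihood multiplied by impact) and greedily selects them until an effort budget is exhausted. The 1 to 5 scales, the exposure formula, the `RiskItem` class and the greedy selection are common risk-based testing conventions assumed here for illustration; none of the three models prescribes this exact procedure.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class RiskItem:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    effort: float    # estimated person-hours to test the item

    @property
    def exposure(self) -> int:
        return self.likelihood * self.impact


def select_by_risk(items: list[RiskItem], budget: float) -> list[str]:
    """Greedy scoping: visit items by descending exposure, keep those that fit the budget."""
    chosen: list[str] = []
    spent = 0.0
    for item in sorted(items, key=lambda i: i.exposure, reverse=True):
        if spent + item.effort <= budget:
            chosen.append(item.name)
            spent += item.effort
    return chosen


items = [RiskItem("payments", 4, 5, 8), RiskItem("login", 3, 4, 4), RiskItem("reports", 2, 2, 6)]
print(select_by_risk(items, budget=12))  # ['payments', 'login']
```

A greedy choice keeps the procedure simple enough for a small team, at the cost of possibly leaving part of the budget unused.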

Without detracting from what has been said about the generic nature of the models, a more appropriate approach for a VSE could be gradual adoption, in which a simplified and inexpensive test process model is available to VSEs in their early stages. Such a model should let the VSE see progress quickly and obtain short-term benefits, which can help it adopt a culture of quality and continuous improvement in its software testing processes. Later, as a result of its evolution (growth in people and resources), the VSE can fully adopt a test process model such as those presented in the analysis.

Although VSEs have minimal infrastructure and limited resources, they can focus on process improvement. The existence of appropriate implementation guides, assessment tools and templates, among others, could be an important enabler for the adoption of the analyzed models. In this regard, TPI offers free downloadable tools, such as an assessment matrix and templates, packaged as a toolkit. For the other two models, ISO/IEC 29119 and TMMi, such tools must be developed by the VSE itself or provided by communities of interest that support these models.

The need for process models suited to small settings has also been observed elsewhere: for CMMI in small settings (Garcia, 2005), and in national models such as MPS.Br in Brazil (Softex, 2016) and MoProSoft in Mexico (NYCE, 2016). At the ISO level, work has been carried out on life cycle profiles for VSEs that develop software (ISO/IEC, 2011) (ISO/IEC, 2011) (ISO/IEC, 2014). All this indicates a need to make more appropriate processes available to VSEs and supports the view that current models are perceived as costly and complex. A current example of a process model specialized in software testing for VSEs is MPT.Br from Brazil (Carvalho, Wanderley, Carneiro, & Honório, 2012); however, there is little evidence of its benefits and results in the literature. Another example is the Spanish model TestPAI, which aims to address the needs of small and medium-sized Spanish companies that require a simple and inexpensive framework for improving their testing processes, and which proposes a test process model compatible with CMMI (Sanz, Saldaña, Garcia, & Gaitero, 2008). For TestPAI as well, there is insufficient empirical evidence of its results beyond the validation case study presented by its own authors (Sanz, Saldaña, Garcia, & Gaitero, 2008).

Acknowledgments

This work is framed within ProCal-ProSer Contract N° 210-FINCYT-IA-2013 (Innovate Perú): “Productivity and Quality Relevance Factors in small software development or service organizations adopted ISO standards”, the Software Engineering Development and Research Group and the Department of Engineering of Pontificia Universidad Católica del Perú.

References

Alone, S., & Glocksien, K. (n.d.). Evaluation of Test Process Improvement approaches: An industrial case study. Retrieved April 14, 2016, from University of Gothenburg: https://gupea.ub.gu.se/bitstream/2077/38986/1/gupea_2077_38986_1.pdf

Carvalho Cavalcanti Furtado, A., Wanderley Gomes, M., Carneiro Andrade, E., & Honório de Farias Junior, I. (2012). MPT.BR: A Brazilian Maturity Model for Testing. 2012 12th International Conference on Quality Software (Aug. 27-29, 2012). Xi'an, Shaanxi: IEEE.

Cernant, L., Norman-Lopez, A., & Duch T-Figueras, A. (September 2014). SMEs are more Important than you think! Challenges and Opportunities for EU Exporting SMEs. DG TRADE Chief Economist.

Dávila, A. (2012). Nueva aproximación de procesos en pequeñas organizaciones de Tecnología de Información. Magazine IEEEPeru. La revista tecnológica de la Sección Perú de IEEE, Vol. 1(No. 2), pp. 30-31.

Dyba, T. (December 2000). An Instrument for Measuring the Key Factors of Success in Software Process Improvement. Empirical Software Engineering, pp. 357-390.

Dyba, T. (December 2002). Enabling Software Process Improvement: An Investigation of the Importance of Organizational Issues. Empirical Software Engineering, 7, pp. 387-390.

Dyba, T. (2003). Factors of software process improvement success in small and large organizations: an empirical study in the Scandinavian context. Proceedings of the 9th European software engineering conference held jointly with 11th ACM SIGSOFT international symposium on Foundations of software engineering (ESEC/FSE-11) (pp. 148-157). New York, USA: ACM.

ECS. (2010). Guidance for writing standards taking into account micro, small and medium-sized enterprises (SMEs) needs. European Committee for Standardization (CEN) and European Committee for Electrotechnical Standardization (CENELEC). Brussels: European Committee for Standardization.

European Union. (May 20, 2003). Commission Recommendation of 6 May 2003 concerning the definition of micro, small and medium-sized enterprises. Official Journal of the European Union, 46, pp. 36–41.

TMMi Foundation. (n.d.). Find an accredited Assessor. Retrieved April 14, 2015, from http://www.tmmi.org/?q=assesor

García, C., & Dávila, A. (2012). Mejora del proceso de pruebas usando el modelo TPI® en proyectos internos de desarrollo con Scrum. Caso Alfa-Lim.

García, C., Dávila, A., & Pessoa, M. (2014). Test process models: Systematic literature review. Software Process Improvement and Capability Determination (pp. 84-93). Springer.

Garcia, S. (2005). Thoughts on Applying CMMI in Small Settings. Pittsburgh: Carnegie-Mellon University. Software Engineering Institute.

Gelperin, D. H., & Hetzel, B. (June 1988). The growth of software testing. Communications of the ACM, 31(6), 687-695.

Genero Bocco, M., Cruz-Lemus, J. A., & Piattini Velthuis, M. (2014). Métodos de investigación en Ingeniería de Software. Ra-Ma Editorial.

ISO/IEC. (2004). ISO/IEC 15504-2:2003/Cor 1:2004 Information technology -- Process assessment -- Part 2: Performing an assessment. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2008). ISO/IEC 12207:2008 Systems and software engineering -- Software life cycle processes. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2010). ISO/IEC TR 24774:2010 Systems and software engineering -- Life cycle management -- Guidelines for process description. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2011). ISO/IEC 29110-1: Software engineering — Lifecycle profiles for Very Small Entities (VSEs) — Part 1: Overview. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2011). ISO/IEC 29110-4-1:2011 Software engineering -- Lifecycle profiles for Very Small Entities (VSEs) Part 4-1: Profile specifications: Generic profile group. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2011). ISO/IEC TR 29110-5-1-2:2011 Software engineering -- Lifecycle profiles for Very Small Entities (VSEs) -- Part 5-1-2: Management and engineering guide: Generic profile group: Basic profile. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2013). ISO/IEC/IEEE 29119-1:2013 Software and systems engineering -- Software testing -- Part 1: Concepts and definitions. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2013). ISO/IEC/IEEE 29119-2: Software and systems engineering -- Software testing -- Part 2: Test process. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2013). ISO/IEC/IEEE 29119-3:2013 Software and systems engineering — Software testing — Part 3: Test Documentation. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2014). ISO/IEC TR 29110-5-6-2:2014 Systems and software engineering -- Lifecycle profiles for Very Small Entities (VSEs) -- Part 5-6-2: Systems engineering -- Management and engineering guide: Generic profile group: Basic profile. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2015). ISO/IEC 33001 Information technology — Process assessment — Concepts and terminology. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2015). ISO/IEC 33002:2015 Information technology -- Process assessment -- Requirements for performing process assessment. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2015). ISO/IEC FDIS 33063 Information technology -- Process assessment -- Process assessment model for software testing. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2015). ISO/IEC TR 15504-7:2008 Information technology -- Process assessment -- Part 7: Assessment of organizational maturity. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2015). ISO/IEC/IEEE 29119-4:2015 Software and systems engineering -- Software testing -- Part 4: Test techniques. Geneva, Switzerland: International Organization for Standardization.

ISO/IEC. (2016). ISO/IEC Guide 17:2016 Guide for writing standards taking into account the needs of micro, small and medium-sized enterprises. Geneva, Switzerland: International Organization for Standardization.

ISTQB. International Software Testing Qualifications Board. (n.d.). Retrieved April 14, 2015, from http://www.istqb.org

Kulkarni, S. (2006). Test process maturity models–yesterday, today and tomorrow. Proceedings of the 6th Annual International Software Testing Conference, Delhi, India. Citeseer.

Laporte, C., Houde, R., & Marvin, J. (2014). 6.4.2 Systems Engineering International Standards and Support Tools for Very Small Enterprises. In INCOSE International Symposium (pp. 551–569). Las Vegas, NV.

Luo, L. (2001). Software testing techniques. Pittsburgh, PA: Institute for Software Research International, Carnegie Mellon University.

Meerts, J. (2016, April 14). Retrieved from http://www.testingreferences.com/testinghistory.php

Mexico, N. (n.d.). Tecnología de la información - Software - Modelos de procesos y evaluación para desarrollo y mantenimiento de software - Parte 2: Requisitos y procesos (MoProSoft). Retrieved April 14, 2015, from http://www.tecnyce.com.mx/index.php/proceso-verif/moprosoft.html

Myers, G. J. (1979). The Art of Software Testing. New York, USA: John Wiley & Sons, Inc.

NIST. (2002). The Economic Impacts of Inadequate Infrastructure for Software Testing. Washington: National Institute of Standards & Technology.

Rasking, M. (2011). Experiences Developing TMMi® as a Public Model. In 11th International Conference, SPICE 2011 (pp. 190–193). Dublin, Ireland: Springer Berlin Heidelberg.

Reid, S. (n.d.). ISO/IEC/IEEE 29119: The New International Software Testing Standards. London, UK: Testing Solutions Group Ltd.

Sanz, A., Saldaña, J., Garcia, J., & Gaitero, D. (2008, December). TestPAI: Un área de proceso de pruebas integrada con CMMI. Revista Española de Innovación, Calidad e Ingeniería del Software (REICIS), 4(4).

Softex. (n.d.). Melhoria de Processo do Software Brasileiro. Retrieved April 14, 2015, from http://www.softex.br/mpsbr/

Sogeti Web Site. (April 14, 2015). Retrieved from Sogeti: http://www.sogeti.com/

Sogeti. (n.d.). TPI NEXT® Process Certification. Retrieved April 14, 2015, from http://www.sogeti.es/globalassets/spain/soluciones/testing/certificacion-tpi-next-sogeti.pdf

Swinkels, R. (2000). A comparison of TMM and other test process improvement models.

Koomen, T., & Pol, M. (1999). Test Process Improvement: A practical step-by-step guide to structured testing. Addison-Wesley Longman, Inc.

TMMi Foundation. (04/06/2016). Retrieved April 14, 2015, from http://www.tmmi.org/

TMMi Foundation. (2012). Test Maturity Model integration (TMMi) Release 1.0. Ireland: Erik van Veenendaal.

TMMi Foundation. (n.d.). TMMi Assessment Method Application Requirements (TAMAR) Version 2.0. Andrew Goslin.

United Nations. (2012). Information Economy Report 2012: The Software Industry and Developing Countries. In L. Cernat (Ed.), United Nations Conference on Trade and Development (UNCTAD) (p. 14). New York and Geneva.

USITC. (2010). Small and Medium-Sized Enterprises: Characteristics and Performance. Washington, DC 20436: United States International Trade Commission. Publication 4189.

Visser, B., de Vries, G., Linker, B., Wilhelmus, L., van Ewijk, A., & van Oosterwijk, M. (2013). TPI® NEXT: Business Driven Test Process Improvement (1st ed., November 17, 2009). UTN Publishers.

Alternative link

http://recibe.cucei.udg.mx/revista/vol7-no1/computacion03.pdf (pdf)